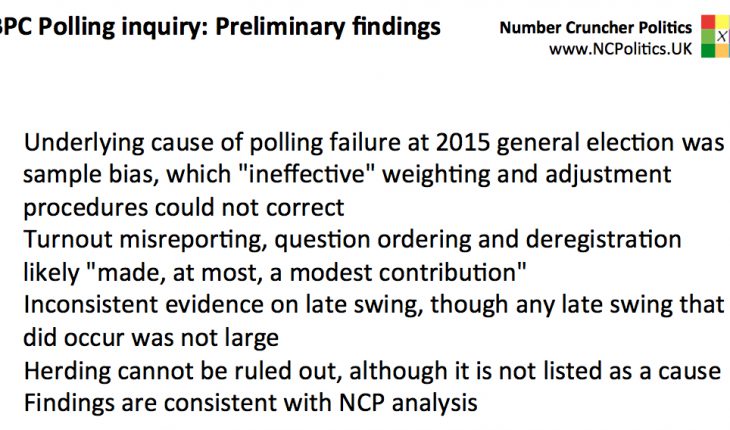

The Sturgis report has now been published. As expected, there are very few changes in the inquiry team’s view since the preliminary findings presented in January – its conclusion remains that the debacle was caused by unrepresentative samples. That conclusion is now fleshed out in considerable detail.

But there are recommendations, which are quoted below with commentary. First, BPC member pollsters should:

1. include questions during the short campaign to determine whether respondents have already voted by post. Where respondents have already voted by post they should not be asked the likelihood to vote question.

A fairly minor issue in 2015, but sensible and good housekeeping.

2. review existing methods for determining turnout probabilities. Too much reliance is currently placed on self-report questions which require respondents to rate how likely they are to vote, with no strong rationale for allocating a turnout probability to the answer choices.

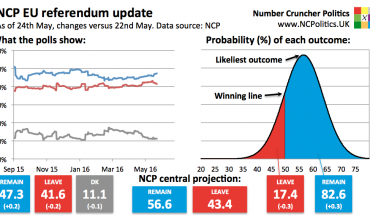

As James Kanagasooriam and I argued in our recent work on EU referendum polling, self-reported likelihood to vote can be highly misleading, particularly far out from elections. Further work is certainly needed in this area.
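To illustrate why the choice of turnout probabilities matters, here is a minimal sketch in Python. The respondents, the 0-10 likelihood scale and the three mapping rules (`unweighted`, `linear`, `certain_only`) are all hypothetical and chosen only for illustration; they are not any pollster's actual method or data.

```python
# Hypothetical respondents: (vote intention, self-reported likelihood to vote, 0-10).
respondents = [
    ("Con", 10), ("Lab", 10), ("Lab", 6), ("Con", 8),
    ("Lab", 5), ("Con", 10), ("Lab", 9), ("Lab", 3),
]

def shares(respondents, turnout_prob):
    """Vote shares after weighting each respondent by an assumed turnout probability."""
    totals = {}
    for party, score in respondents:
        totals[party] = totals.get(party, 0.0) + turnout_prob(score)
    total = sum(totals.values())
    return {party: weight / total for party, weight in totals.items()}

# Three arbitrary ways of turning the same answers into turnout probabilities.
def unweighted(score):
    return 1.0                              # everyone counts equally

def linear(score):
    return score / 10                       # 7 out of 10 is treated as a 70% chance

def certain_only(score):
    return 1.0 if score == 10 else 0.0      # only "10 out of 10" respondents count

for rule in (unweighted, linear, certain_only):
    print(rule.__name__, shares(respondents, rule))
```

With the same raw answers, the hypothetical Conservative share moves from 37.5% to roughly 46% to roughly 67% depending on the rule, which is the sense in which an allocation without a strong rationale can drive the headline figure.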

3. review current allocation methods for respondents who say they don’t know, or refuse to disclose which party they intend to vote for. Existing procedures are ad hoc and lack a coherent theoretical rationale. Model-based imputation procedures merit consideration as an alternative to current approaches.

Undecideds and refusers are usually either excluded or reallocated (the so-called shy Tory adjustment). This has tended to work well in elections, but the parameters are indeed arbitrary. The question of what to do in a non-general election situation (such as a referendum) is a further complication.

4. take measures to obtain more representative samples within the weighting cells they employ.

What it says on the tin. Much easier said than done, but it does need to be done…

5. investigate new quota and weighting variables which are correlated with propensity to be observed in the poll sample and vote intention.

…and to make sure it is working (and to fill in where it falls short), weighting should attempt the same thing.

The Economic and Social Research Council (ESRC) should:

6. fund a pre as well as a post-election random probability survey as part of the British Election Study in the 2020 election campaign.

This has been done in the past, most recently in 2010, and makes complete sense. It would, for example, be very useful for calibration. The (modest) risk, of course, is that the survey itself is wrong. But it is absolutely the right thing to do.

BPC rules should be changed to require members to:

7. state explicitly which variables were used to weight the data, including the population totals weighted to and the source of the population totals.

8. clearly indicate where changes have been made to the statistical adjustment procedures applied to the raw data since the previous published poll. This should include any changes to sample weighting, turnout weighting, and the treatment of Don’t Knows and Refusals.

9. commit, as a condition of membership, to releasing anonymised poll micro-data at the request of the BPC management committee to the Disclosure Sub Committee and any external agents that it appoints.

10. pre-register vote intention polls with the BPC prior to the commencement of fieldwork. This should include basic information about the survey design such as mode of interview, intended sample size, quota and weighting targets, and intended fieldwork dates.

11. provide confidence (or credible) intervals for each separately listed party in their headline share of the vote.

12. provide statistical significance tests for changes in vote shares for all listed parties compared to their last published poll.

In its response, the BPC said that it would implement disclosure recommendations 7, 8 and 9 immediately – these are aimed squarely at making the processes more transparent, so herding becomes much harder. The BPC said it would allow the next year or so to develop an industry standard for 11 and 12, which are more to do with communication. On the question of pre-registration, the council said it would consider how best to respond (translation: there is considerable resistance to this from its members).
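For a sense of what recommendations 11 and 12 could involve, here is a rough sketch in Python. It assumes simple random sampling and a normal approximation, which quota and panel polls do not strictly satisfy, so a real industry standard would probably need to account for design effects from weighting. The function names and the example figures (34% and 36% on samples of 1,000) are hypothetical.

```python
import math

def share_interval(share, n, z=1.96):
    """Approximate 95% confidence interval for one party's share.

    Assumes simple random sampling; weighting and quota designs
    would generally widen this interval.
    """
    se = math.sqrt(share * (1 - share) / n)
    return share - z * se, share + z * se

def change_z_score(share_prev, n_prev, share_now, n_now):
    """z statistic for the change in a party's share between two independent polls."""
    se = math.sqrt(
        share_prev * (1 - share_prev) / n_prev
        + share_now * (1 - share_now) / n_now
    )
    return (share_now - share_prev) / se

# Hypothetical example: a party on 34% in the previous poll and 36% in this one.
low, high = share_interval(0.34, 1000)
z = change_z_score(0.34, 1000, 0.36, 1000)
significant = abs(z) > 1.96  # two-sided test at the 5% level

print(f"34% on n=1000: {low:.1%} to {high:.1%}")
print(f"change of 2 points: z = {z:.2f}, significant = {significant}")
```

Under these assumptions a two-point move between polls of 1,000 falls well short of significance. Note that intervals like this capture sampling error only, not the kind of bias from unrepresentative samples that the report identifies.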

There will also be a BPC follow-up report, scheduled for 2019.

What is disappointing is that, while the report notes that weighting and adjustment procedures were unable to correct for the problem of unrepresentative samples, it doesn’t cover the reasons why they failed to do so. The question is this – why, when samples had the right proportions of voters according to how they recalled voting in 2010, did they have the wrong proportions of 2015 voters? I addressed this point here and on Newsnight back in January.

Nevertheless, this third volume in the unhappy trilogy (following similar inquests after 1992 and 1970) is a vital piece of work and will inform the debate about political opinion research in the UK for a long time to come.

Polling inquiry report published | 30th March 2016