Starting with the Liberal Democrats, phone polls have consistently put the level of their support higher than both YouGov and the newer online polls. This is due to methodology – a disproportionate number of 2010 Lib Dem voters were undecided for much of this parliament. Phone polls generally make some allowance for these voters, whereas online polls tend to exclude them. As these “Don’t knows” have made up their minds, the gap in measured Lib Dem support between the two modes has narrowed considerably.

With the combined Green parties, however, the picture is very different. The Greens are perhaps harder to poll than any other party, because their support has been up to eight times what it was at the 2010 election, making past vote-weighting an extremely blunt tool. The Green surge that started in autumn 2014 was never really reflected in the newer online polls, whereas both YouGov and the phone polls showed Green support nearly doubling, with the two tracking one another very closely indeed.

Probably the most pronounced difference of all has been in the level of UKIP support, with a fairly consistent 4-5 point spread between the phone pollsters and the newer online polls (which, incidentally, was exactly the margin by which the latter overestimated UKIP’s vote share at the 2014 European Elections). YouGov has typically tracked the phone pollsters much more closely, although there are signs of divergence in periods when UKIP support is lower, including recent months.

There has also been an ongoing debate over whether to include UKIP in the main prompt, and at the time of writing polling firms remain divided on the issue. However, pollsters in both modes that have either introduced UKIP prompting or run parallel tests have found the impact of the change to be insignificant.

Panel composition is crucial too. Given the additional challenges that pollsters face in the current environment, achieving as representative a sample as possible is more important than ever. As for why panels might differ, YouGov’s panel appears to be distinctive in two respects: it is very large, at around 400,000 UK panellists, and recruitment to it is entirely under YouGov’s control. As with some other online panels, much of its recruitment is via targeted advertising. This makes it less likely that the panel becomes contaminated by activists, and where they do join, the panel’s size dilutes their impact.

It’s worth remembering too that there are substantial differences between individual pollsters (house effects) in addition to these mode effects. Ipsos MORI is the last remaining phone pollster to use what were once called “unadjusted” figures (without political weighting or the spiral of silence adjustment), which explains its recent tendency to show Labour leads. By contrast, in recent weeks Opinium has tended to show the best Conservative results of any online pollster, which again may be related to its methodology.

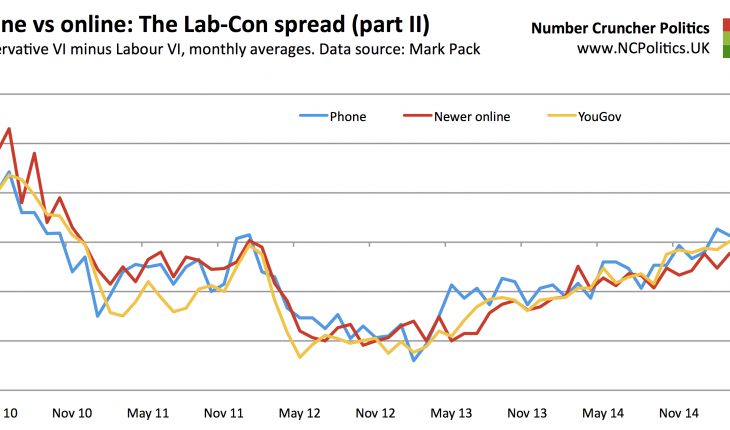

The obvious question is which mode provides the more accurate snapshot of public opinion. One shouldn’t simply assume that more established pollsters are invariably “right” and the others “wrong”. In polling, as in any industry, new entrants can bring fresh thinking and innovation: ICM was a relatively new player when it invented the spiral of silence adjustment. But to the extent that a pollster’s track record is relevant, the data does suggest that the more seasoned polls have looked better for the Conservatives and worse for Labour in recent months. Whether the polls converge over the next fortnight, or one set turns out to be substantially more accurate than the other, remains to be seen.

Note: Figures accurate as of April 22nd.

[1] ICM, Ipsos MORI, Populus (phone)/Lord Ashcroft, ComRes (phone) and YouGov

[2] Opinium, TNS-BMRB, Populus (online), ComRes (online), Survation and Panelbase

Polling divergence – phone versus online and established versus new

24th April 2015