The release of the latest ComRes online poll for today’s Independent on Sunday and Sunday Mirror certainly got people talking. Its toplines showed a 15 point Conservative lead, the biggest since January 2010, the biggest for the Tories while in government since the Gulf war in 1991, the biggest increase in a Tory government’s lead compared with the prior election result since October 1987 (four months after that year’s election) and a tie with ComRes’s June poll for the lowest Labour vote share while in opposition since September 1983, when Michael Foot was preparing to hand over to Neil Kinnock. All of those stats courtesy of Mark Pack.

ComRes/Indy/Mirror: CON 42 (=) LAB 27 (-2) LIB 7 (=) UKIP 15 (+2) GRN 3 (=) SNP 5 (=) 18th-20th Nov N=2,067 Tabs https://t.co/63CZ77VNoG

— NumbrCrunchrPolitics (@NCPoliticsUK) November 21, 2015

Twitter predictably went into overdrive, with some delighted, some claiming vindication and others crying foul. The controversy centres on the turnout model used by ComRes, which was introduced following this year’s election. Some reacted to the poll by flagging up the polling failure in May (which for some people has become a stock response to any poll they don’t like) while others complained about the ComRes adjustment. In fact some people even tried to spin it both ways, which in effect means criticising pollsters for their inaccuracy at the election and criticising them for trying to fix it! I imagine being a pollster is like being a referee – get it right, no-one remembers, get it wrong, no-one forgets.

But the ComRes turnout filter is there for a reason: there is overwhelming evidence (see this, this, this, this and this) that people overstate their likelihood to vote. That shouldn’t be a surprise when final polls routinely show over 90% of people saying they will vote, compared with an actual turnout of 66%, and ComRes felt this needed to be adjusted for. Because the overclaim of likelihood to vote isn’t equal between parties, the filter tends to increase the Tory lead by four or five points, which explains a lot of the disparity between ComRes and other houses.
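To see why an uneven overclaim moves the lead, here is a rough sketch of turnout weighting in Python. To be clear, this is not the ComRes model: the raw support figures and the per-party turnout probabilities are numbers I have made up purely for illustration, and `vote_shares` is a hypothetical helper.

```python
def vote_shares(support, turnout_prob):
    """Weight each party's raw support by an assumed chance of actually voting,
    then rescale so the shares add up to 100 again."""
    weighted = {party: support[party] * turnout_prob[party] for party in support}
    total = sum(weighted.values())
    return {party: 100 * w / total for party, w in weighted.items()}


# Hypothetical raw shares among all respondents who name a party (not real poll data).
raw_support = {"CON": 38.0, "LAB": 31.0, "OTHER": 31.0}

# Hypothetical turnout probabilities: everyone says they will vote,
# but one party's supporters are assumed to follow through more often.
assumed_turnout = {"CON": 0.70, "LAB": 0.62, "OTHER": 0.65}

unfiltered_lead = raw_support["CON"] - raw_support["LAB"]
filtered = vote_shares(raw_support, assumed_turnout)
filtered_lead = filtered["CON"] - filtered["LAB"]

print(f"Lead before turnout weighting: {unfiltered_lead:.1f}")
print(f"Lead after turnout weighting:  {filtered_lead:.1f}")
```

With these invented inputs the lead widens by a few points even though nobody has changed their mind. The only thing that has changed is whose stated intention to vote is taken at face value.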

Subsequent information (as discussed in my assessment of why the polls were wrong) suggests that the turnout effect was probably smaller than this, and indeed ComRes made it clear that this was an interim solution. Though if the turnout effect is smaller, the effect of some other bias(es) against the Conservatives (that pollsters aren’t yet correcting for) must have been larger.

Some have commented that ComRes is “out of line” with other pollsters. This is factually correct, but the insinuation is that it’s thereby wrong and the others are right. This isn’t necessarily the case – it’s possible that the ComRes numbers are “wrong” and the other pollsters are “right”, but it’s also possible that the ComRes poll is, in fact, the most accurate reflection of public opinion. Until permanent solutions are implemented we’ll continue to get a wide range of numbers, though arguably that’s no bad thing on its own – we just won’t be entirely sure who’s right.

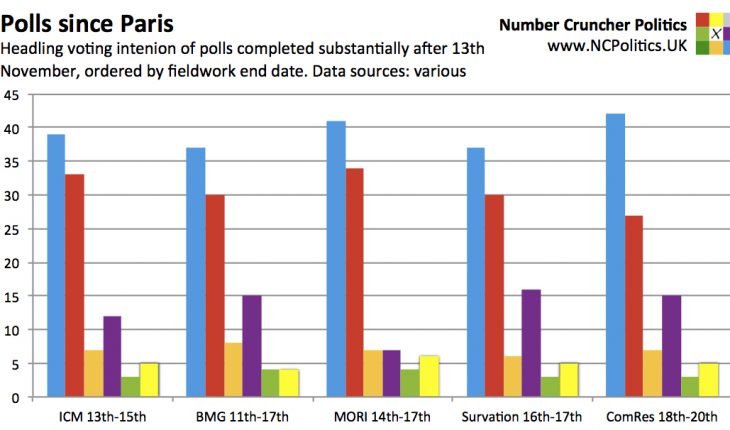

But while polls disagree on levels, they agree a lot more on the trends. As I’ve written for the Times, new leaders tend to give their party a bounce, and we’re coming up to the exact point at which the bounce should be strongest. Also, as we head away from the election and into mid term, governments usually drop back and oppositions usually gain. Yet the early signs of a Corbyn bounce have faded and, post-Paris, Labour has been heading in the opposite direction – all five of the post-Paris surveys have shown an increased Tory lead when compared with the previous poll from the same pollster (by just under two points on average).

There’s also an interesting disparity in terms of leader ratings. Corbyn scores -28 with ComRes and -3 (the least negative of any party leader) with Ipsos MORI. Since these questions are asked before the turnout filters are applied, the difference is due to something else. That something else is likely to be the question itself – ComRes asks respondents whether they have a favourable or unfavourable view, while MORI asks whether they are happy with the way that person is doing their job.

Something that struck me is that 25% of Conservative voters said they were satisfied with how Corbyn was doing his job, while 80% said the same about David Cameron. Therefore at least 5% must have said they were happy with both (and probably more, given the don’t knows). It’s not entirely beyond the bounds of possibility that a number of Tories have an unfavourable view of the Labour leader, but are satisfied with the way he is leading his party, for reasons other than those the question intended. There’s no evidence for that, and the reason may well be something else, but it is one way the numbers could be consistent…
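For anyone who wants to check the "at least 5%" arithmetic, it is just the standard overlap bound for two percentages of the same group. A minimal sketch, where `overlap_bounds` is a hypothetical helper and the only inputs are the 25% and 80% quoted above:

```python
def overlap_bounds(pct_a, pct_b):
    """Return the (minimum, maximum) percentage of a group that can belong
    to both of two subgroups, given each subgroup's share of the group."""
    lowest = max(0.0, pct_a + pct_b - 100.0)  # the two shares cannot total more than 100 without overlapping
    highest = min(pct_a, pct_b)               # the overlap cannot exceed the smaller share
    return lowest, highest


# Conservative voters satisfied with Corbyn (25%) and with Cameron (80%).
lowest, highest = overlap_bounds(25.0, 80.0)
print(f"Satisfied with both: between {lowest:.0f}% and {highest:.0f}%")
```

The published figures only pin down that range. Where the true overlap sits within it can't be read off the toplines alone.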