Britain voted 52-48 to Leave the EU. As I tweeted on Friday, there are many things to analyse in this result, but of the most immediate interest to this site is the performance of the NCP forecast. I’ll be conducting a full review and reporting on it in due course, but there are a number of points to reflect on now.

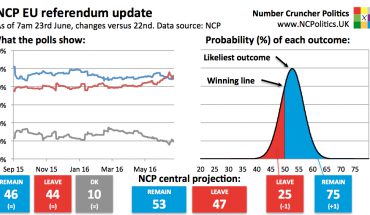

The forecast gave a 25% chance of a vote for Brexit, which was to say that Remain was favourite, not that “Remain will win”. It was certainly not like saying (as most did in May 2015) that the chances of a Conservative majority were essentially zero, only for it to happen. Nor was it comparable to saying that Donald Trump had no chance (or a negligible chance) of the Republican nomination, which he eventually secured. The model built in plenty of uncertainty, and 25% represented a real possibility of Brexit. My public comments all along have reflected this.

That said, I’m not going to pretend to be happy with a central forecast as far from the result as it was. Considering the three steps of the forecast – aggregating the polls, estimating true support for each side and projecting the evolution of support between polling and voting – the source of the error is already fairly clear.
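The three steps can be illustrated with a toy pipeline. This is a minimal sketch under stated assumptions, not the NCP model: it assumes an unweighted poll average, an additive bias correction and swingback, and normally distributed error. The function names, example polls and parameter values are all hypothetical.

```python
import math
from statistics import mean

# A toy three-step forecast. NOT the NCP model: the polls, bias adjustment,
# swingback size and uncertainty (sigma) used here are hypothetical.

def two_party_share(remain: float, leave: float) -> float:
    """Remain's share of the Remain+Leave vote, with undecided voters excluded."""
    return 100.0 * remain / (remain + leave)

def normal_cdf(x: float, mu: float, sigma: float) -> float:
    """P(X <= x) for X ~ Normal(mu, sigma^2)."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def forecast(polls, bias_adjustment: float, swingback: float, sigma: float):
    # Step 1: aggregate the polls (here, an unweighted mean of two-party shares).
    polling_average = mean(two_party_share(r, l) for r, l in polls)
    # Step 2: estimate true support by correcting for an assumed systematic polling error.
    true_support = polling_average + bias_adjustment
    # Step 3: project the change between polling and voting (e.g. swingback to
    # the status quo, Remain), then treat the remaining uncertainty as normal.
    projected_remain = true_support + swingback
    p_leave = normal_cdf(50.0, projected_remain, sigma)  # P(Remain share < 50%)
    return projected_remain, p_leave

# Hypothetical final polls as (Remain %, Leave %); undecideds make up the rest.
example_polls = [(48, 44), (45, 46), (50, 42)]
projected, p_leave = forecast(example_polls, bias_adjustment=0.0, swingback=1.0, sigma=3.0)
print(f"Projected Remain share: {projected:.1f}%, P(Leave wins): {p_leave:.0%}")
```

In this toy setup, a negative `bias_adjustment` (assuming the polls overstate Remain) is the lever that moves Leave towards favourite, which is why the middle step matters so much.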

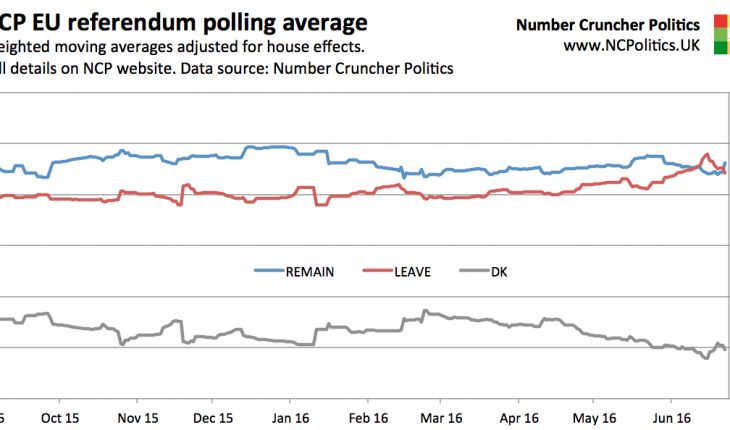

It has been claimed that swingback to the status quo – often seen in referendums and factored into the model – was illusory, didn’t happen this time, or even happened in reverse. In fact the evidence is that it did occur, with Remain gaining ground across polls in the final 10 days and then to an even greater extent on the day itself than the model had predicted. Both published “on the day” polls showed Remain gaining, by 1 to 2 points. Even when polls are wrong in terms of levels, we can still be pretty confident that they got the direction of the changes right.

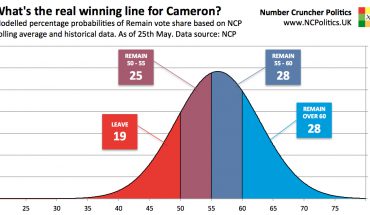

The final polls ranged between Leave+2 and Remain+10, with two of the eight British Polling Council members that published polls in the final four days putting Leave narrowly ahead. The NCP polling average, excluding undecided voters, overestimated the Remain share by 3.3 points – less than a straight average would have (see the BPC statement). There is no sensible way of averaging the final polls that would have put Leave ahead, and indeed no polling average that I’m aware of did so in its final figures.
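As a rough illustration of how a polling average “excluding undecided voters” is computed and scored, the sketch below converts each poll to a Remain share of the two-party vote and compares the average with Remain’s actual share of about 48.1%. The polls and sample sizes are invented, and the sample-size weighting is just one hypothetical alternative to a straight average, not the NCP weighting.

```python
from statistics import mean

# Remain's share of the valid vote in the 23 June 2016 referendum (rounded).
ACTUAL_REMAIN = 48.1

def two_party_share(remain: float, leave: float) -> float:
    """Remain % of the Remain+Leave total; undecided voters are dropped."""
    return 100.0 * remain / (remain + leave)

def average_error(polls, weight_by_sample: bool = False) -> float:
    """Signed error (points) of a polling average vs the result; >0 overstates Remain."""
    shares = [two_party_share(r, l) for r, l, _n in polls]
    if weight_by_sample:
        # Hypothetical alternative: weight each poll by its sample size.
        sizes = [n for _r, _l, n in polls]
        average = sum(s * n for s, n in zip(shares, sizes)) / sum(sizes)
    else:
        # Straight (unweighted) average of two-party shares.
        average = mean(shares)
    return average - ACTUAL_REMAIN

# Hypothetical final polls: (Remain %, Leave %, sample size).
final_polls = [(44, 46, 1000), (51, 41, 2000), (47, 45, 1500)]
straight = average_error(final_polls)
weighted = average_error(final_polls, weight_by_sample=True)
print(f"Straight average overstates Remain by {straight:.1f} points")
print(f"Sample-weighted average overstates Remain by {weighted:.1f} points")
```

One of these made-up polls puts Leave ahead, yet both averages still put Remain above 50%, so the choice of averaging method alone does not flip the call.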

So that leaves the middle step – estimating the true support for each side – as the prime suspect. In other words, the error lay in not predicting that the average of polls would turn out to have pointed to the wrong winner.

Last year this site correctly predicted the polling failure of a generation, something that many people thought wasn’t possible. I’ve always been clear that the referendum would be a much bigger test for both forecasters and pollsters. For example, I said in a couple of interviews around the turn of the year that this call – of a unique event – would be significantly harder, and there was a strong consensus among psephologists and practitioners that this was the case. I repeated the warning earlier this month.

Therefore, calling Brexit as the likeliest outcome based on the data available would have been significantly harder than getting last year right, and I didn’t manage to do so this time. Given the significance of the EU referendum, this was an unfortunate first call to get wrong.

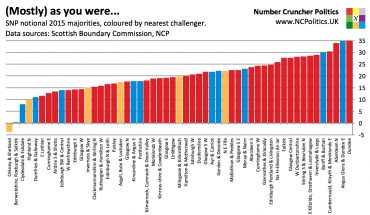

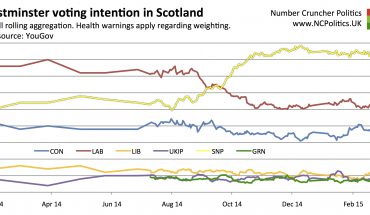

However, NCP’s track record remains among the very best, having made correct calls on polling accuracy against the consensus at the 2015 general election and Labour leadership elections and on the results of the recent devolved elections. Nevertheless, all relevant lessons will be learnt, as has been the case after every previous prediction.

Finally, given the customary lack of recognition for those that actually get it right, let me congratulate TNS, Opinium, Stephen Bush, Rob Hayward and everyone else that called it. I’ll be publishing further analysis in the near future.

Referendum reflection

26th June 2016