Why the risks are particularly high this time

Opinion polls always run the risk of failure – it is delusional to think that systematic bias was something that happened in the past and can’t reappear. That’s why pollsters continually work to manage it. If people were to read only one FAQ on the British Polling Council website, it should be this one, concerning the margin of error:

You say those calculations apply to “a random poll with a 100% response rate”. Surely that’s pie in the sky?

Fair point. Many polls are non-random, and response rates are often very much lower — well below 50% in many countries for polls conducted over just a few days.
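For reference, the textbook margin-of-error calculation for a share estimated from a simple random sample looks like the minimal Python sketch below. The helper name `margin_of_error` is hypothetical, and the sketch assumes an approximate 95% confidence level (z = 1.96) and no design effect. Nothing in the formula accounts for non-random selection or non-response, which is exactly the FAQ's point.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Half-width of the normal-approximation confidence interval for a share p.

    Valid only under the idealised assumptions the FAQ questions: a simple
    random sample of size n with a 100% response rate. z = 1.96 gives an
    approximate 95% confidence level.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a proportion between 0 and 1")
    if n <= 0:
        raise ValueError("n must be a positive sample size")
    return z * math.sqrt(p * (1.0 - p) / n)


# Hypothetical example: a party on 35% in a poll of 1,000 respondents.
moe = margin_of_error(0.35, 1000)
print(f"35% +/- {100 * moe:.1f} points")
```

For a party on 35% in a sample of 1,000 this gives roughly ±3 points. With a non-random sample or a low response rate, that figure no longer bounds the real error.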

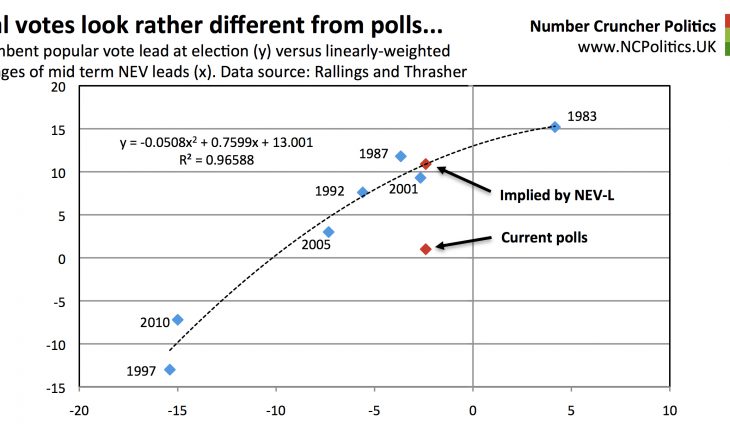

But the risk is greater at some times than at others. New techniques introduced over the last 20 years, notably political weighting, have helped pollsters avoid a repeat of 1992 (so far). But no technique is infallible, and the current political climate may have blunted the effectiveness of some of them.

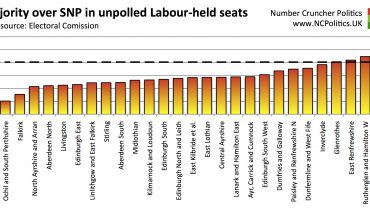

Political weighting in particular has generally worked well in a stable political system. It ensures that a weighted sample contains, in addition to all the demographic proportions, representative numbers of those who identify with each party or voted for it at the last election, typically with an adjustment for false recall (that is, people reporting their past vote incorrectly). But more complex patterns are problematic. For example, a pollster can ensure that a sample contains the correct number of 2010 Lib Dems. But what if (as is likely) the 2010 Lib Dems defecting to one party are very different from those going to another party or into the “don’t know” column, and they respond to polls at different rates?
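A minimal Python sketch of past-vote weighting, under loudly stated assumptions: a single weighting variable (recalled 2010 vote), simple cell weighting rather than the multi-variable weighting a real pollster would use, and hypothetical target shares standing in for whatever false-recall-adjusted targets a pollster actually chooses. The function names and all figures are illustrative only. The sketch shows the problem raised above: the weights depend only on how many 2010 Lib Dems are in the sample, not on which of them responded.

```python
from collections import Counter


def past_vote_weights(sample, targets):
    """Cell weights that make weighted recalled-past-vote shares match targets.

    sample:  list of dicts, each with 'past_vote' and 'now' keys
    targets: dict mapping past vote -> target share (shares should sum to 1);
             hypothetical figures, assumed already adjusted for false recall
    """
    n = len(sample)
    counts = Counter(r["past_vote"] for r in sample)
    # Weight for a cell = target share / share observed in the raw sample.
    return [targets[r["past_vote"]] / (counts[r["past_vote"]] / n) for r in sample]


def weighted_shares(sample, weights, key):
    """Weighted share of each value of `key` (e.g. current voting intention)."""
    totals = Counter()
    for respondent, w in zip(sample, weights):
        totals[respondent[key]] += w
    grand_total = sum(totals.values())
    return {value: t / grand_total for value, t in totals.items()}


# Hypothetical targets and toy samples (not real data). The list
# multiplication shares dict objects, which is fine as they are never mutated.
TARGETS = {"Con": 0.4, "Lab": 0.3, "LD": 0.3}
loyalists = [{"past_vote": "Con", "now": "Con"}] * 4 + [{"past_vote": "Lab", "now": "Lab"}] * 4

# Same number of 2010 Lib Dems in each sample; only their destinations differ.
sample_a = loyalists + [{"past_vote": "LD", "now": "Lab"}] * 2
sample_b = loyalists + [{"past_vote": "LD", "now": "Lab"}, {"past_vote": "LD", "now": "Con"}]

for name, sample in (("A", sample_a), ("B", sample_b)):
    w = past_vote_weights(sample, TARGETS)
    print(name, weighted_shares(sample, w, "past_vote"), weighted_shares(sample, w, "now"))
```

Both toy samples hit the past-vote targets exactly, yet current Conservative support comes out at 40% in sample A and 55% in sample B, purely because of which Lib Dem defectors happened to respond.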

.@GideonSkinner: This election much more complicated: Not about Lab/Cons swing any more #GE2015

(Ipsos MORI, @IpsosMORI, April 30, 2015)

The same problem potentially arises in reverse with UKIP. The party has taken support from most other parties, but if each party’s defectors to UKIP behave differently from its loyal 2010 voters, UKIP support may be over- or underestimated. Worse, as far as the big picture is concerned, if the behavioural differences between defectors and loyalists themselves vary from party to party (in other words, if the composition of UKIP support is mismeasured), they directly affect estimates of support for the other parties.

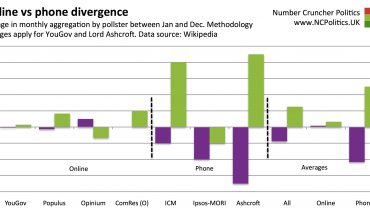

Some pollsters have gone on record to express their concerns, including Andy White of ComRes and Keiran Pedley from exit pollster GfK NOP. Others have conceded privately that they are unusually concerned about this election. A slightly troubling observation is the divergence between modes of polling, or perhaps between pollsters of varying longevity. Some have suggested that online polling should have negated the ‘shyness’ problem, because a computer offers more anonymity than telephone or (rarely, nowadays) face-to-face polling. Intuitively this makes sense, but the numbers haven’t (yet) borne the theory out.

Online panels, even if they overcome one problem, potentially create new ones. Unlike telephone polls, which draw quasi-random samples, online panel polls rely on statistical models built on non-random underlying data. Panelists have to be recruited, often by responding to an advert online, then complete a sign-up process, and then, for each poll, accept an invitation to complete a survey. At every stage there is potential for self-selection or differential response. Weighting the raw data evens out the bias only to the extent that the bias correlates with the factors used for weighting. Some pollsters even use sophisticated algorithms to identify potential ‘panel stuffers’ (activists posing as run-of-the-mill voters). But if there is a residual, systematic bias among genuine respondents, neither weighting nor filtering will remove it.
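To illustrate why weighting cannot remove a bias it cannot see, here is a toy Python calculation with entirely hypothetical numbers. The panel over-represents younger people (a bias that weighting by age corrects) and, within each age group, Conservative supporters are less likely to take part (a residual bias unrelated to the weighting variable). The weighting shown is simple post-stratification on a single variable, an assumption chosen for illustration rather than any pollster's actual scheme.

```python
def mix(shares, support):
    """Overall support implied by cell shares and within-cell support rates."""
    return sum(shares[cell] * support[cell] for cell in shares)


# Hypothetical population: equal numbers of younger and older voters.
population_share = {"young": 0.5, "old": 0.5}
true_support = {"young": 0.30, "old": 0.50}  # true Con support in each cell

# Hypothetical online panel: too many younger respondents, and within each
# age group genuine Con supporters are under-represented.
panel_share = {"young": 0.7, "old": 0.3}
panel_support = {"young": 0.25, "old": 0.45}

truth = mix(population_share, true_support)
raw = mix(panel_share, panel_support)
# Post-stratification by age: reweight each cell to its population share,
# keeping the support rates observed within each cell of the panel.
weighted = mix(population_share, panel_support)

print(f"truth {truth:.0%}, raw {raw:.0%}, weighted {weighted:.0%}")
```

Weighting by age closes part of the gap (31% raw to 35% weighted, against a true 40%), but the five-point bias within age groups survives because it does not correlate with the weighting variable.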

On phone poll methodology, some have also suggested that the ‘spiral of silence’ adjustment, pioneered by ICM, fixed the problem once and for all. It hasn’t, and I don’t recall ICM ever claiming that it had. Reallocating a portion of “don’t knows” and refusers to the party they voted for previously makes complete sense, and ICM’s performance has earned it a reputation as a ‘gold standard’. But this adjustment deals with only one type of silence: people who answer the phone, don’t hang up on the pollster, agree to answer some fairly personal questions, say they are likely to vote if an election were held tomorrow, claim not to know whom they would vote for, and yet disclose how they voted last time. It can’t deal directly with issues elsewhere, even though it may be a good proxy for them.
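A minimal Python sketch of a spiral-of-silence style reallocation. The function name `reallocate_dont_knows` and the default reallocation fraction of 0.5 are assumptions for illustration, not a statement of ICM's published parameters. The sketch makes the limitation concrete: only don't-knows who disclose a past vote can be reallocated, and people who never took part do not appear in the data at all.

```python
from collections import Counter


def reallocate_dont_knows(respondents, fraction=0.5):
    """Vote shares after reallocating a fraction of don't-knows to their past party.

    respondents: list of (intention, past_vote) pairs; intention is a party
                 name, or None for "don't know"/refused; past_vote is a party
                 name, or None if not disclosed.
    fraction:    hypothetical share of each eligible don't-know counted as a
                 vote for the party they backed last time.
    """
    totals = Counter()
    for intention, past_vote in respondents:
        if intention is not None:
            totals[intention] += 1.0
        elif past_vote is not None:
            # Only don't-knows who disclose a past vote can be reallocated.
            totals[past_vote] += fraction
        # Don't-knows with no disclosed past vote are simply excluded.
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {party: t / grand_total for party, t in totals.items()}


# Toy sample (hypothetical): 4 Con, 4 Lab, 2 silent 2010 Con voters, 1 fully silent.
sample = [("Con", "Con")] * 4 + [("Lab", "Lab")] * 4 + [(None, "Con")] * 2 + [(None, None)]
print(reallocate_dont_knows(sample, fraction=0.0))  # no adjustment
print(reallocate_dont_knows(sample))                # with reallocation
```

Without the adjustment the toy sample is tied at 50% each; reallocating half of the two silent Conservatives gives Con about 56% and Lab about 44%. The fully silent respondent, and anyone who hung up, contributes nothing either way.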

We also know that phone response rates are poor. In the US, response rates are often barely into double digits (in percentage terms); in the UK they are higher, but not hugely so. And contrary to some claims, pollsters do not simply “bump up the number of Tories”. They apply adjustments, which have tended to increase reported levels of Conservative support. This has sometimes led those it doesn’t suit to cry foul or to report unweighted numbers. Were it not for weighting, the charts above would look considerably worse.
